NIPS-2011 Satellite Meeting on

CAUSAL GRAPHS: LINKING BRAIN STRUCTURE TO FUNCTION

Dec 11, 2011. Carmen de la Victoria, Granada

Single-day workshop funded by CEI BIOTIC and GENIL, grant IMS-2010-1

Organizing institutions at Granada University:

Departamento de Ciencias de la Computacion e Inteligencia Artificial (Jesus M Cortes) and

Institute Carlos I for Theoretical and Computational Physics (Miguel A Muñoz)

The workshop “Causal Graphs: Linking Brain Structure to Function” has now taken place. This single-day meeting was held on Dec 11, one day before NIPS 2011 started, at the Carmen de la Victoria in Granada's Albaycin quarter.

The workshop discussed recent investigations combining causality measures and network analysis methods in neuroscience. The main goal was to encourage cross-talk and dialogue between these research fields, discussing potential incompatibilities and the advantages and disadvantages of each approach for addressing important neuroscientific questions.

A single-page program is available.

Talk abstracts are available.

Organizing Chairs:

Jesus Cortes (Granada University, Spain)

Andrea Greve (Cambridge University, UK)

Daniele Marinazzo (Ghent University, Belgium)

Miguel Angel Muñoz (Granada University, Spain)

Sebastiano Stramaglia (Bari University, Italy)

Confirmed Key Speakers:

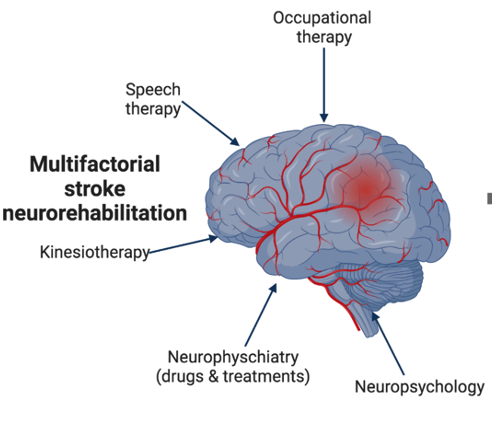

Anil Seth (Sussex University, UK). CAUSALITY IN NEUROSCIENCE

For decades, the main ways to study the effect of one part of the nervous system upon another have been either to stimulate or lesion the first part and investigate the outcome in the second. A fundamentally different approach to identifying causal connectivity in neuroscience is provided by a focus on the predictability of ongoing activity in one part from that in another. This approach has been made possible by the pioneering work of Wiener (1956) and Granger (1969). The Wiener–Granger method, unlike stimulation and ablation, does not require direct intervention in the nervous system. Rather, it relies on the estimation of causal statistical influences between simultaneously recorded neural time series data, either in the absence of identifiable behavioral events or in the context of task performance. Causality in the Wiener–Granger sense is based on the statistical predictability of one time series that derives from knowledge of one or more others. I will define and illustrate Wiener-Granger causality (WGC) with a pragmatic focus on application to neural time-series data, including electroencephalographic and local field-potential signals, spike trains, and functional MRI signals. I will compare the method with contrasting approaches such as ‘dynamic causal modelling’. Returning to theory, I will introduce some novel network-level concepts based on WGC including multivariate causality, causal density, Granger-autonomy, and Granger-emergence, and I will try to show how these concepts can shed new light on multi-scale neural causality. I will finish with some thoughts about what one should require of a measure of causality, in the specific context of the relation between network structure and network dynamics.
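As a minimal sketch of the Wiener–Granger idea described above (not the speaker's own code), the Python below fits two least-squares autoregressive models to a target series x: a restricted model that uses only x's own past, and a full model that also uses the past of y. The log ratio of their residual variances (Geweke's form of Granger causality) is positive when y's past improves the prediction of x. The model order, the helper names `residual_variance` and `granger_causality`, and the simulated data are all illustrative assumptions.

```python
import numpy as np

def residual_variance(target, predictors, order):
    """Least-squares AR fit of target[t] on lags 1..order of every predictor series."""
    rows = []
    for t in range(order, len(target)):
        lags = [series[t - k] for series in predictors for k in range(1, order + 1)]
        rows.append([1.0] + lags)  # intercept term plus lagged values
    X = np.asarray(rows)
    y = np.asarray(target[order:])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return np.var(y - X @ coef)

def granger_causality(x, y, order=2):
    """Granger causality from y to x: log of restricted over full residual variance."""
    restricted = residual_variance(x, [x], order)  # x's own past only
    full = residual_variance(x, [x, y], order)     # x's past plus y's past
    return np.log(restricted / full)

# Hypothetical toy data: y is white noise that drives x with a one-sample lag.
rng = np.random.default_rng(0)
n = 2000
y = rng.standard_normal(n)
x = np.zeros(n)
for t in range(1, n):
    x[t] = 0.5 * x[t - 1] + 0.8 * y[t - 1] + rng.standard_normal()

gc_y_to_x = granger_causality(x, y)  # expected to be clearly positive
gc_x_to_y = granger_causality(y, x)  # expected to be close to zero
```

In this toy setup the restricted model cannot use y[t-1], so its residual variance is roughly 1 + 0.8² = 1.64 against roughly 1 for the full model, giving a value near ln 1.64 ≈ 0.5 for y → x, while x → y stays near zero.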

Stefano Panzeri (IIT, Italy). CAUSALITY ANALYSIS OF INFORMATION FLOW FROM CORTICAL TIME SERIES

Work by Michel Besserve, Nikos K Logothetis, Bernhard Schoelkopf and Stefano Panzeri. I will describe an approach that we developed for robustly estimating transfer entropy from simultaneously recorded extracellular cortical time series. I will also describe how we applied these methods to evaluate the role of neural synchronization in the gamma (40-100 Hz) frequency range in the spatial propagation of cortical information.
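Transfer entropy asks a question similar to Granger causality, in information-theoretic terms: how much the source's past reduces uncertainty about the target's next value beyond what the target's own past already provides. The sketch below is a naive plug-in estimator for discrete symbol sequences with a history length of one. It is an illustrative assumption, not the robust estimator described in the talk, and plug-in estimates like this one are biased on short recordings. The function name and the toy data are hypothetical.

```python
import math
import random
from collections import Counter

def transfer_entropy(source, target):
    """Plug-in transfer entropy (bits) from source to target, history length 1."""
    # Each triple is (target next value, target current value, source current value).
    triples = list(zip(target[1:], target[:-1], source[:-1]))
    n = len(triples)
    c_full = Counter(triples)
    c_next_self = Counter((xn, x) for xn, x, _ in triples)
    c_self_src = Counter((x, y) for _, x, y in triples)
    c_self = Counter(x for _, x, _ in triples)
    te = 0.0
    for (xn, x, y), count in c_full.items():
        p_joint = count / n
        p_given_both = count / c_self_src[(x, y)]          # p(x_next | x_now, y_now)
        p_given_self = c_next_self[(xn, x)] / c_self[x]    # p(x_next | x_now)
        te += p_joint * math.log2(p_given_both / p_given_self)
    return te

# Hypothetical toy data: the target copies a random binary source with a one-step delay.
rng = random.Random(0)
src = [rng.randint(0, 1) for _ in range(5000)]
tgt = [0] + src[:-1]
te_src_to_tgt = transfer_entropy(src, tgt)  # close to 1 bit
te_tgt_to_src = transfer_entropy(tgt, src)  # close to 0 bits
```

Because the target's next value is fully determined by the source's current value, while the target's own past carries no information about it, the source-to-target estimate approaches one bit.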

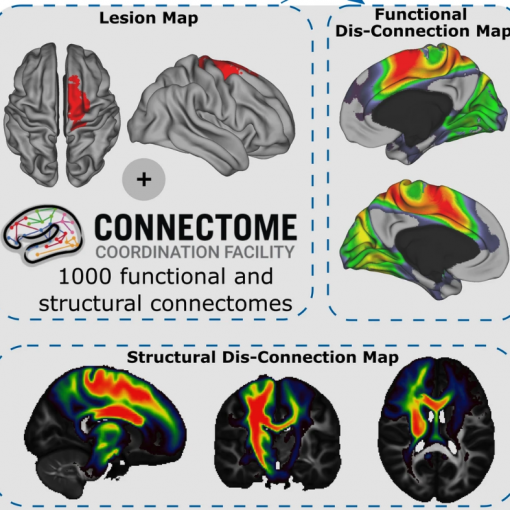

Marcus Kaiser (Newcastle University, UK). THE HUMAN CONNECTOME

The human brain consists of connections between neurons at the local level and of connections between brain regions at the global level (Kaiser, 2011). The study of the entire network, the connectome, has become a recent focus in neuroscience research. Using methods from physics and the social sciences, researchers have found that neuronal networks show properties of scale-free networks, which make them robust to random damage, and of small-world systems, which support better information integration. First, I will describe novel results concerning the hierarchical and modular organisation of neural networks. Second, I will report on the role of hierarchical modularity in activity spreading. Importantly, low connectivity between modules can create bottlenecks for activity spreading. Limiting activity spreading is crucial for preventing epileptic seizures. Indeed, connectivity between modules is increased in epilepsy patients, which could explain the rise of large-scale synchronization. Finally, unlike most other networks, the nodes and edges of brain networks are organized in three-dimensional space. This organisation is non-optimal with respect to wiring-length minimization but closer to optimal with respect to reducing path lengths, and thus signal-propagation delays (Kaiser & Hilgetag, 2006). Together, these findings indicate that brain evolution is driven by multiple constraints, so its solutions look non-optimal when only a single factor is considered. I will discuss how these structural properties influence neural dynamics and how they arise during evolution and individual brain development (Varier & Kaiser, 2011).
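Small-world analyses typically compare two graph measures: the average clustering coefficient (how often a node's neighbours are also linked to each other) and the characteristic path length (the mean number of edges on shortest paths between node pairs). A minimal pure-Python sketch of both follows, applied to a hypothetical 20-node ring lattice. It only illustrates the measures and is not the analysis pipeline used in the talk; the helper names are assumptions.

```python
from collections import deque

def ring_lattice(n, k):
    """Undirected ring where each node links to its k/2 nearest neighbours on each side."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for step in range(1, k // 2 + 1):
            j = (i + step) % n
            adj[i].add(j)
            adj[j].add(i)
    return adj

def average_clustering(adj):
    """Mean over nodes of (links among neighbours) / (possible links among neighbours)."""
    total = 0.0
    for nbrs in adj.values():
        nbrs = list(nbrs)
        d = len(nbrs)
        if d < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        links = sum(1 for a in range(d) for b in range(a + 1, d) if nbrs[b] in adj[nbrs[a]])
        total += links / (d * (d - 1) / 2)
    return total / len(adj)

def characteristic_path_length(adj):
    """Mean shortest-path length over all ordered node pairs (assumes a connected graph)."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search from source
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

lattice = ring_lattice(20, 4)
clustering = average_clustering(lattice)
path_length = characteristic_path_length(lattice)
```

In this lattice each node's four neighbours share three of the six possible links, so the clustering is exactly 0.5. Randomly rewiring a small fraction of edges shortens the path length sharply while keeping clustering relatively high, which is the small-world signature.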

Bert Kappen (Radboud University Nijmegen, The Netherlands). THE VARIATIONAL GARROTE

In this talk, I present a new solution method for sparse regression using $L_0$ regularization. The model introduces variational selector variables $s_i$ for the features $i$ and can be viewed as an alternative parametrization of the spike and slab model. The posterior probability for $s_i$ is computed in the variational approximation. The variational parameters appear in the approximate model in a way that is similar to Breiman’s Garrote model. I refer to this method as the variational Garrote (VG). The VG is compared numerically with the Lasso method and with ridge regression. Numerical results on synthetic data show that the VG yields more accurate predictions and reconstructs the true model more accurately than the other methods. The naive implementation of the VG scales cubically with the number of features. By introducing Lagrange multipliers we obtain a dual formulation of the problem that scales cubically in the number of samples, which is more efficient in large applications where the number of samples is smaller than the number of features.
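The gain from a dual formulation can be seen in a simpler setting. The sketch below uses ordinary ridge regression, a plainly swapped-in example and not the variational Garrote itself: the primal solution solves a p × p system (cubic in the number of features p), while the equivalent dual solution solves an n × n system (cubic in the number of samples n), and both return the same weights. The function names and the random data are hypothetical.

```python
import numpy as np

def ridge_primal(X, y, lam):
    """Solve (X^T X + lam I_p) w = X^T y: a p x p system, cubic in the feature count."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def ridge_dual(X, y, lam):
    """Solve (X X^T + lam I_n) a = y, then w = X^T a: an n x n system, cubic in the sample count."""
    n = X.shape[0]
    alpha = np.linalg.solve(X @ X.T + lam * np.eye(n), y)
    return X.T @ alpha

# Hypothetical data with far fewer samples (30) than features (500).
rng = np.random.default_rng(1)
X = rng.standard_normal((30, 500))
y = rng.standard_normal(30)
w_primal = ridge_primal(X, y, lam=1.0)  # solves a 500 x 500 system
w_dual = ridge_dual(X, y, lam=1.0)      # solves a 30 x 30 system
```

The two agree because of the identity (XᵀX + λI)⁻¹Xᵀ = Xᵀ(XXᵀ + λI)⁻¹, so when n is much smaller than p the dual route is the cheaper one.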

Volunteers helping with organization (all at Granada University):

Juan Jose Segura Gonzalez

Daniel Bellido Perez

Asier Erramuzpe Aliaga

Gema Roman Lopez

Samuel Sanchez Talavera

More information about the venue